Only released in EOL distros.

Package Summary

This stack provides software that can recover articulation models given 3D or 6D pose trajectories.

The articulation_msgs package defines messages and services for exchanging trajectories and kinematic models.

The articulation_models package provides several nodes for fitting and selecting kinematic models for articulated objects. We employ maximum-likelihood sample consensus (MLESAC) for robustly estimating the kinematic parameters, and the Bayesian information criterion (BIC) for selecting between alternative model classes. The learned model assigns likelihoods to observed trajectories, predicts the latent configuration of the articulated object, projects the noisy poses onto the model, predicts the Jacobian, etc.

The articulation_structure package provides a service for fitting and selecting the kinematic model for articulated objects consisting of more than two parts.

Several tutorials, example launch files and demonstration videos are available in the package articulation_tutorials.

- Author: Juergen Sturm

- License: BSD

- Source: svn http://alufr-ros-pkg.googlecode.com/svn/trunk/articulation

Available Tutorials

All tutorials are located in the package articulation_tutorials.

- Learning Kinematic Models for Articulated Objects using a Webcam

This tutorial is a step-by-step guide that shows how to learn kinematic models of articulated objects using only a webcam and a laptop.

- Getting started with Articulation Models

This tutorial guides you step-by-step through the available tools for fitting, selecting and displaying kinematic trajectories of articulated objects. We will start with preparing a text file containing a kinematic trajectory. We will use an existing script to publish this trajectory, and use the existing model fitting and selection node to estimate a suitable model. We will then visualize this model in RVIZ.

- Using the Articulation Models (Python)

In this tutorial, you will create a simple Python script that calls ROS services for model fitting and selection. The script will output the estimated model class (such as rotational or prismatic) and the estimated model parameters (such as the radius of rotation).

- Using the Articulation Model Library (C++)

This tutorial demonstrates how to use the articulation model library directly in your programs. This is more efficient than sending ROS messages or calling ROS services. In this tutorial, a short program is presented that creates an artificial trajectory of an object rotating around a hinge, and then uses the model fitting library to recover the rotational center and radius. Further, the sampled trajectory and the fitted model are published as ROS messages for visualization in RVIZ.

- Learning Kinematic Models from End-Effector Trajectories

This tutorial applies model fitting and model selection to real data recorded by a mobile manipulation robot operating various doors and drawers.
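To give a rough idea of what the C++ library tutorial above does (sample a hinge trajectory, then recover the rotational center and radius), here is a minimal Python sketch. It does not use the articulation model library: it swaps in a plain algebraic least-squares circle fit in 2D, and the names `solve3` and `fit_circle` as well as the hinge values are hypothetical.

```python
import math

def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = (m[r][3] - tail) / m[r][r]
    return x

def fit_circle(points):
    """Least-squares fit of x^2 + y^2 + D*x + E*y + F = 0.

    Returns (center_x, center_y, radius).
    """
    n = float(len(points))
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    syy = sum(y * y for _, y in points)
    sxy = sum(x * y for x, y in points)
    # Normal equations for the unknowns (D, E, F).
    ata = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    atb = [
        -sum(x * (x * x + y * y) for x, y in points),
        -sum(y * (x * x + y * y) for x, y in points),
        -sum(x * x + y * y for x, y in points),
    ]
    d, e, f = solve3(ata, atb)
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)

# Artificial trajectory: a handle moving on a quarter circle around a hinge.
HINGE = (0.5, -0.2)   # hypothetical hinge position (metres)
RADIUS = 0.8          # hypothetical door radius (metres)
trajectory = [
    (HINGE[0] + RADIUS * math.cos(math.radians(a)),
     HINGE[1] + RADIUS * math.sin(math.radians(a)))
    for a in range(0, 91, 5)
]

cx, cy, radius = fit_circle(trajectory)
print("center: (%.3f, %.3f), radius: %.3f" % (cx, cy, radius))
```

The real library works on 6D poses and uses a robust estimator, so this sketch only conveys the geometric idea.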

Introduction

The main packages in the articulation models stack are:

- the articulation_msgs package, which contains the message and service declarations,

- the articulation_models package, which contains the core components like model fitting and model selection,

- the articulation_rviz_plugin package, which contains two visualization plugins for RVIZ. These can be used to inspect the current observation sequence in real time and in full 3D, and to monitor the progress of learning,

- the articulation_structure package, which provides a service for finding the kinematic structure of an articulated object consisting of more than two parts, and

- the articulation_tutorials package, which contains several tutorials for learning articulation models using command-line tools, C++ and Python, as well as an out-of-the-box demo using checkerboard markers and a webcam.

These packages make it possible to fit various models to 6D trajectories. At the moment, the following models (and estimators) are implemented:

- rigid

- prismatic (linear joint)

- rotational (rotary joint)

- PCA-GP (non-linear, non-parametric)
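The robust estimation idea behind these model fits can be sketched for the prismatic case. The following is a simplified MLESAC-style estimator for a 2D line, not the code in articulation_models: hypotheses come from minimal samples of two points and are scored by a mixture likelihood (Gaussian inliers plus uniform outliers). The mixing weight `gamma` is held fixed here, whereas full MLESAC estimates it; all names and default values are assumptions.

```python
import math
import random

def line_from_pair(p, q):
    """Minimal-sample hypothesis: anchor point and unit direction through p and q."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        return None  # degenerate sample (identical points)
    return p, (dx / norm, dy / norm)

def perpendicular_distance(point, line):
    """Distance of a point from the line, via the 2D cross product."""
    (ox, oy), (ux, uy) = line
    return abs((point[0] - ox) * uy - (point[1] - oy) * ux)

def mixture_log_likelihood(points, line, sigma, gamma, outlier_density):
    """Sum of log(gamma * Gaussian inlier term + (1 - gamma) * uniform outlier term)."""
    total = 0.0
    for pt in points:
        d = perpendicular_distance(pt, line)
        inlier = math.exp(-0.5 * (d / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
        total += math.log(gamma * inlier + (1.0 - gamma) * outlier_density)
    return total

def mlesac_line(points, iterations=200, sigma=0.01, gamma=0.8,
                outlier_density=1.0, seed=0):
    """Return the sampled line hypothesis with the highest mixture likelihood."""
    rng = random.Random(seed)
    best_line, best_score = None, -math.inf
    for _ in range(iterations):
        p, q = rng.sample(points, 2)
        line = line_from_pair(p, q)
        if line is None:
            continue
        score = mixture_log_likelihood(points, line, sigma, gamma, outlier_density)
        if score > best_score:
            best_line, best_score = line, score
    return best_line, best_score

# Drawer-like track along the x-axis with slight jitter, plus three gross outliers.
track = [(0.05 * i, 0.002 * (-1) ** i) for i in range(20)]
track += [(0.2, 0.5), (0.6, -0.4), (0.9, 0.7)]

line, score = mlesac_line(track)
print("anchor:", line[0], "direction:", line[1])
```

Scoring by likelihood instead of counting inliers means that hypotheses are ranked by how well they explain the inliers, not only by how many points fall inside a threshold.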

Furthermore, a model selection algorithm based on the Bayesian information criterion (BIC) is available; see the C++ library implemented in articulation_models. To interact with the library from Python, you can use one of the two ROS interfaces:

- articulation_models/model_learner_msg subscribes to articulation_msgs/TrackMsg messages, and publishes articulation_msgs/ModelMsg messages. You can use the simple_publisher.py script to read 3D/6D trajectories from text files and publish them as articulation_msgs/TrackMsg-messages.

- articulation_models/model_learner_srv offers services for model fitting and model selection.
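The BIC-based model selection mentioned above can be illustrated without ROS. The sketch below compares a rigid and a prismatic model on 2D positions under an assumed Gaussian noise level and picks the model with the lower BIC. The parameter counts, the noise value `SIGMA` and all function names are assumptions made for this example; they are not taken from articulation_models.

```python
import math

SIGMA = 0.01  # assumed measurement noise in metres (hypothetical value)

def log_likelihood(residuals, sigma=SIGMA):
    """Log-likelihood of scalar residuals under zero-mean Gaussian noise."""
    n = len(residuals)
    squared = sum(r * r for r in residuals)
    return -0.5 * squared / sigma ** 2 - n * math.log(sigma * math.sqrt(2.0 * math.pi))

def bic(loglik, num_params, num_samples):
    """Bayesian information criterion; lower values are better."""
    return -2.0 * loglik + num_params * math.log(num_samples)

def centroid(points):
    n = float(len(points))
    return sum(x for x, _ in points) / n, sum(y for _, y in points) / n

def rigid_residuals(points):
    """Rigid model: every observation is explained by one fixed position."""
    mx, my = centroid(points)
    return [c for x, y in points for c in (x - mx, y - my)]

def prismatic_residuals(points):
    """Prismatic model: observations lie on a line through the centroid.

    The component along the line is absorbed by the latent configuration,
    so only the perpendicular component counts as an error.
    """
    mx, my = centroid(points)
    sxx = sum((x - mx) ** 2 for x, _ in points)
    syy = sum((y - my) ** 2 for _, y in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # angle of the principal axis
    nx, ny = -math.sin(theta), math.cos(theta)      # unit normal of the line
    return [c for x, y in points for c in ((x - mx) * nx + (y - my) * ny, 0.0)]

# (residual function, assumed number of free parameters)
MODELS = {
    "rigid": (rigid_residuals, 2),
    "prismatic": (prismatic_residuals, 3),
}

def select_model(points):
    """Return the name of the model class with the lowest BIC."""
    scores = {}
    for name, (residual_fn, num_params) in MODELS.items():
        loglik = log_likelihood(residual_fn(points))
        scores[name] = bic(loglik, num_params, len(points))
    return min(scores, key=scores.get)

drawer = [(0.05 * i, 0.002 * (-1) ** i) for i in range(10)]
static = [(0.002 * (-1) ** i, 0.002 * ((i % 3) - 1)) for i in range(10)]
print(select_model(drawer), select_model(static))
```

The penalty term `num_params * log(num_samples)` is what keeps the more flexible prismatic model from winning on a track that barely moves.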

Demonstration

To get a feeling for what articulation models are good for, please have a look at the demo launch files in the demo_fitting/launch-folder of the articulation_tutorials package. These files contain real data recorded by Advait Jain using the Cody robot in Charlie Kemp's lab at Georgia Tech. For compilation, simply type

rosmake articulation_tutorials

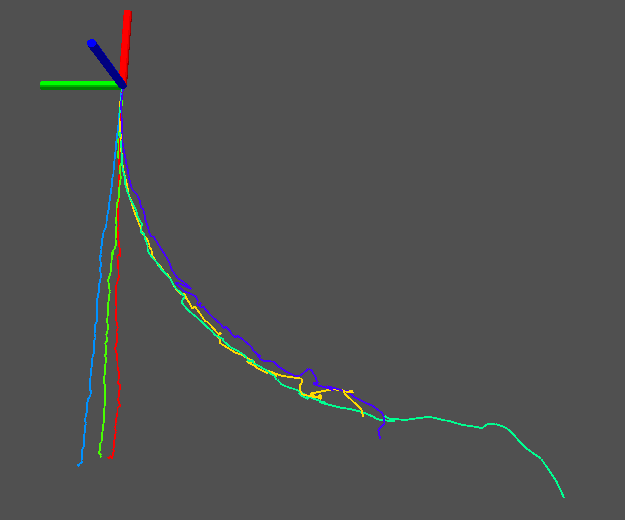

Visualize tracks

This demo plays the log files from the demo_fitting/data folder using the simple_publisher.py script. The visualization plugin for RVIZ is loaded automatically and subscribes to the articulation_msgs/TrackMsg messages. Each trajectory is visualized in a different color.

roslaunch articulation_tutorials visualize_tracks.launch

Visualization of the published tracks in RVIZ using the articulation_models plugin.

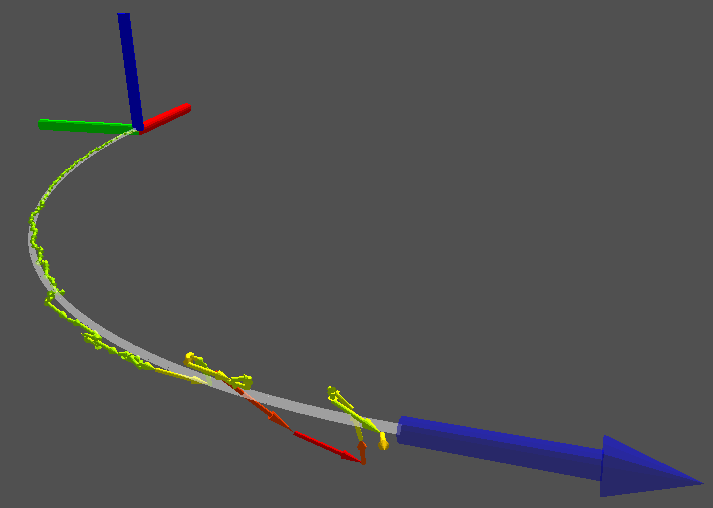

Fit, select and visualize models for tracks

roslaunch demo_fitting fit_models.xml

Result after model fitting and selection, as visualized by the articulation_models RVIZ plugin. The white trajectory shows the ideal trajectory from the model, over its observed (latent) configuration range. The observation sequence is visualized by a trajectory of small arrows. The color indicates their likelihood: green means very likely, red means unlikely/outlier.
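The quantities shown in this visualization can be sketched for a prismatic model. The example below computes the latent configuration of each observation, samples the ideal trajectory over the observed configuration range, and maps a likelihood weight to a green-to-red color. The color mapping, the noise value and all names are hypothetical and are not taken from the RVIZ plugin.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def configuration(point, origin, axis):
    """Latent configuration q: signed travel of the point along the unit axis."""
    return dot([p - o for p, o in zip(point, origin)], axis)

def project(point, origin, axis):
    """Closest point on the prismatic axis (the de-noised position)."""
    q = configuration(point, origin, axis)
    return [o + q * u for o, u in zip(origin, axis)]

def likelihood_color(point, origin, axis, sigma=0.01):
    """Map an unnormalized Gaussian weight in (0, 1] to (r, g, b): green likely, red unlikely."""
    error = math.dist(point, project(point, origin, axis))
    weight = math.exp(-0.5 * (error / sigma) ** 2)
    return (1.0 - weight, weight, 0.0)

def ideal_trajectory(points, origin, axis, steps=5):
    """Sample the model between the smallest and largest observed configuration."""
    qs = [configuration(p, origin, axis) for p in points]
    q_min, q_max = min(qs), max(qs)
    return [
        [o + (q_min + (q_max - q_min) * i / (steps - 1)) * u
         for o, u in zip(origin, axis)]
        for i in range(steps)
    ]

origin = [0.0, 0.0, 0.0]   # hypothetical point on the drawer axis
axis = [1.0, 0.0, 0.0]     # hypothetical unit direction of travel
observations = [[0.0, 0.0, 0.0], [0.2, 0.0, 0.0], [0.4, 0.1, 0.0]]

for obs in observations:
    print(configuration(obs, origin, axis), likelihood_color(obs, origin, axis))
print(ideal_trajectory(observations, origin, axis))
```

The third observation lies 0.1 m off the axis, far beyond the assumed noise level, so it would be drawn in red as an outlier.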

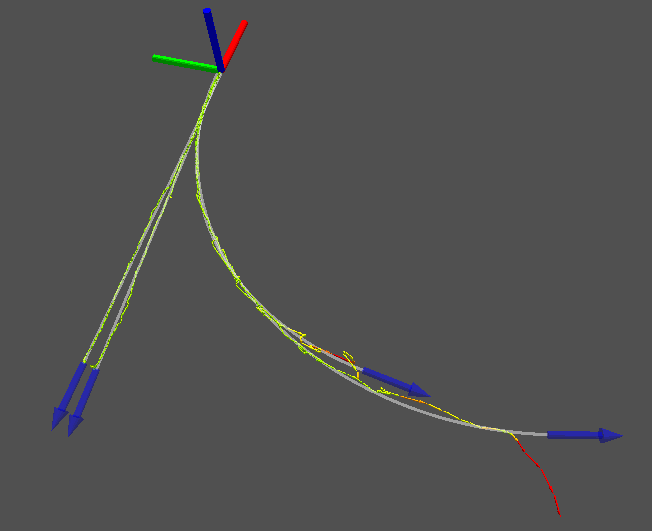

Resulting models for 6 different trajectories. The three trajectories on the left stem from data gathered while opening a drawer, the three trajectories on the right stem from a cabinet door.

References

Jürgen Sturm, Advait Jain, Cyrill Stachniss, Charlie Kemp, Wolfram Burgard. Operating Articulated Objects Based on Experience. In Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 2010.

Jürgen Sturm, Kurt Konolige, Cyrill Stachniss, Wolfram Burgard. Vision-based Detection for Learning Articulation Models of Cabinet Doors and Drawers in Household Environments. In Proc. of the IEEE International Conference on Robotics and Automation (ICRA), Anchorage, USA, 2010.

Jürgen Sturm, Cyrill Stachniss, Vijay Pradeep, Christian Plagemann, Kurt Konolige, Wolfram Burgard. Learning Kinematic Models for Articulated Objects. In Proc. of the International Joint Conference on Artificial Intelligence (IJCAI), Pasadena, USA, 2009.

More Information

More information (including videos, papers, presentations) can be found on the homepage of Juergen Sturm.

Report a Bug

If you run into any problems, please feel free to contact Juergen Sturm <juergen.sturm@in.tum.de>.