Only released in EOL distros:

Package Summary

This package implements a real-time active 3D scene segmentation. It is based on an implementation of Belief Propagation on the GPU.

- Author: Marten Bjorkman. Maintained by Jeannette Bohg

- License: BSD

- Repository: wg-ros-pkg

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/tabletop_object_perception/tags/tabletop_object_perception-0.4.3

Package Summary

This package implements a real-time active 3D scene segmentation. It is based on an implementation of Belief Propagation on the GPU. Example code for segmenting offline images and of images being published on ros topics is available.

- Author: Mårten Björkman. Maintained by Jeannette Bohg

- License: BSD

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/branches/0.5-branch

pr2_object_manipulation: active_realtime_segmentation | fast_plane_detection | manipulation_worlds | object_recognition_gui | object_segmentation_gui | pick_and_place_demo_app | pr2_create_object_model | pr2_grasp_adjust | pr2_gripper_grasp_controller | pr2_gripper_grasp_planner_cluster | pr2_gripper_reactive_approach | pr2_gripper_sensor_action | pr2_gripper_sensor_controller | pr2_gripper_sensor_msgs | pr2_handy_tools | pr2_interactive_gripper_pose_action | pr2_interactive_manipulation | pr2_interactive_object_detection | pr2_manipulation_controllers | pr2_marker_control | pr2_navigation_controllers | pr2_object_manipulation_launch | pr2_object_manipulation_msgs | pr2_pick_and_place_demos | pr2_pick_and_place_tutorial | pr2_tabletop_manipulation_launch | pr2_wrappers | rgbd_assembler | robot_self_filter_color | segmented_clutter_grasp_planner | tabletop_collision_map_processing | tabletop_object_detector | tf_throttle

Package Summary

This package implements a real-time active 3D scene segmentation. It is based on an implementation of Belief Propagation on the GPU. Example code for segmenting offline images and of images being published on ros topics is available.

- Author: Mårten Björkman. Maintained by Jeannette Bohg

- License: BSD

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/branches/0.6-branch

Package Summary

This package implements a real-time active 3D scene segmentation. It is based on an implementation of Belief Propagation on the GPU. Example code for segmenting offline images and of images being published on ros topics is available.

- Author: Mårten Björkman. Maintained by Jeannette Bohg

- License: BSD

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/trunk

References

The active realtime segmenter was developed by Mårten Björkman from KTH Stockholm (Sweden). The details of the method are explained in the following papers:

M. Björkman and D. Kragic, Active 3D Segmentation through Fixation of Previously Unseen Objects,

in British Machine Vision Conference (BMVC), Aberystwyth, UK, September 2010.M. Björkman, D. Kragic, Active 3D scene segmentation and detection of unknown objects, in IEEE International Conference on Robotics and Automation, Anchorage, USA, 2010.

Summary of the Segmentation Method

Instead of just relying on two possible hypothesis, figure and ground, the segmentation method implemented in this package adds a third hypothesis, a flat surface. It is assumed that most objects of interest are placed on flat surfaces somewhere in the scene. This simplifies the problem of segregating an object from the surface it stands on, when both are very similar in appearance.

The segmentation approach is an iterative two-stage method that first performs pixel-wise labeling using a set of model parameters and then updates these parameters in the second stage. As such the method is similar to Expectation-Maximization with the distinction that instead of enumerating over all combinations of labellings, which in case of dependencies between neighboring pixels becomes prohibitively many, model evidence is summed up on a per-pixel basis using marginal distributions of labels obtained using belief propagation.

The most critical phase of any iterative scheme for figure-ground segmentation is initialization. There has to be some prior assumption of what is likely to belong to the foreground. In the original system to which the segmentation system has been originally applied (humanoid head from ARMAR III), we have a fixating system and assume that points close to the center of fixation are most likely to be part of the foreground. An imaginary 3D ball is placed around this fixation point and everything within the ball is initially labeled as foreground. For the flat surface hypothesis we apply RANSAC to find the most likely plane. The remaining points are initially labeled as background points.

Other solutions are to use e.g. attention. On the PR2, an interactive approach has been chosen for initialising the object hypotheses through an rviz plugin implemented in the package object_segmentation_gui.

Using the Active Segmenter

An example ros node is contained in the package.

src/dosegment_node.cpp

This specific node reads out an RGB image and a disparity image and segments it iteratively. The main API functions are used and documented. Specifically, the function to convert a ros image into a 16bit aligned image suitable for the GPU computation is used. In this case, only one object is added as foreground hypothesis. In the current implementation, 6 objects can be segmented simultaneously. Furthermore, to obtain coloured images from the PR2 narrow stereo cameras, the package rgbd_assembler can be used.

Furthermore, offline data is provided with the package on which the segmentation can be tested. It was collected with the vision system running on the humanoid head from ARMAR III. To get the data, uncomment the following lines at the botom of the CMakeLists.txt file.

# Demo datasets rosbuild_download_test_data (http://www.csc.kth.se/~bohg/testImgDisp.tgz demo/testImgDisp.tgz) rosbuild_untar_file (demo/testImgDisp.tgz demo/data/ ALL)

The code in

src/dosegment.cpp

can then be run to test the segmentation on this data. This example code is fully documented and similar to the previous example code.

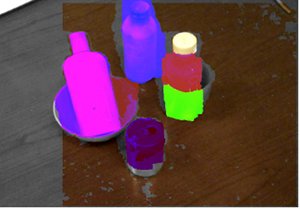

Example Output

Here are example results from the offline testing data that was collected with the ARMAR III humanoid head. Since we have a fixating system, the initial point around which the forground is initilised is in the center of the image.

On the left, we have one example from Kinect data using the package object_segmentation_gui to initialise object hypotheses from human input. On the right side, RGB-D data from the PR2 vision system has been assembled with the package rgbd_assembler. Initial object hypotheses have been again selected using human input through the object_segmentation_gui. The original segmentation method has been extended to deal with regions in the image that only have grey scale information.