This package was only released in ROS distributions that are now end-of-life (EOL).

Package Summary

Populates and manages a collision environment using the results of sensor processing from the tabletop_object_detector. Functionality includes adding and removing detected objects from the collision environment, and requesting static collision maps for obstacle avoidance.

- Author: Matei Ciocarlie

- License: BSD

- Repository: wg-ros-pkg

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/tabletop_object_perception/tags/tabletop_object_perception-0.4.3

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/branches/0.5-branch

- Source: svn https://code.ros.org/svn/wg-ros-pkg/stacks/pr2_object_manipulation/branches/0.6-branch

- Source: git https://github.com/ros-interactive-manipulation/pr2_object_manipulation.git (branch: groovy-devel)

Collision Map Processing

This package has two main roles:

- add the results of the tabletop_object_detector to the collision environment

  - once added to the collision environment, an object gets a collision name which can be used later to reason about collisions with that particular object

  - recognized objects (from the database of models) get added to the collision environment as triangle meshes

  - unknown objects (segmented point clouds) get added to the collision map as bounding boxes

  - (optional) the table gets added to the collision map as a box

  - once added to the collision environment, an object (known or unknown) is also repackaged as a GraspableObject, which can be provided as input to a Pickup or Place request sent to the object_manipulator

- acquire static collision maps using the tilting laser

  - the tilting laser is used to build a view of the environment that extends beyond what the narrow stereo cameras can see

  - a complete laser scan is used to build a static collision map of the environment, which can be used for motion planning and collision avoidance

  - any objects already present in the collision environment (such as the robot itself, or detected objects added in the previous step) are filtered out of the static collision map

  - the static collision map can be thought of as containing the obstacles in the environment about which we have no information, other than that they should not be collided with
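The filtering step of the second role can be pictured with the minimal Python sketch below. It rests on stated assumptions: known objects (robot links, detected objects) are approximated by axis-aligned boxes, whereas the package itself works with the meshes and boxes held in the collision environment, and the functions `point_in_box`, `filter_known_objects` and `occupied_voxels` are hypothetical illustrations, not part of the package API. Laser points not explained by any known object are quantized into occupied grid cells, one common way to represent a static collision map.

```python
import math
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float]
# Hypothetical simplification: every known object is an axis-aligned box
# given as (min corner, max corner).
Box = Tuple[Point, Point]
Voxel = Tuple[int, int, int]


def point_in_box(p: Point, box: Box, padding: float = 0.0) -> bool:
    """True if p lies inside box, grown by `padding` on every side."""
    lo, hi = box
    return all(l - padding <= c <= h + padding for c, l, h in zip(p, lo, hi))


def filter_known_objects(points: Iterable[Point], known: List[Box],
                         padding: float = 0.0) -> List[Point]:
    """Drop laser points explained by an object already in the environment."""
    return [p for p in points
            if not any(point_in_box(p, b, padding) for b in known)]


def occupied_voxels(points: Iterable[Point], resolution: float) -> Set[Voxel]:
    """Quantize the remaining points into occupied grid cells."""
    return {tuple(math.floor(c / resolution) for c in p) for p in points}


# Usage: two points fall inside known objects, one is an unexplained obstacle.
scan = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (2.01, 0.02, 0.51)]
known = [((-0.1, -0.1, -0.1), (0.1, 0.1, 0.1)),  # e.g. a detected object
         ((0.9, 0.9, 0.9), (1.1, 1.1, 1.1))]     # e.g. part of the robot
unexplained = filter_known_objects(scan, known)
static_map = occupied_voxels(unexplained, resolution=0.05)
```

The optional `padding` grows each known box slightly, so that sensor noise near an object's surface does not leave a shell of spurious obstacles around it in the static map.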

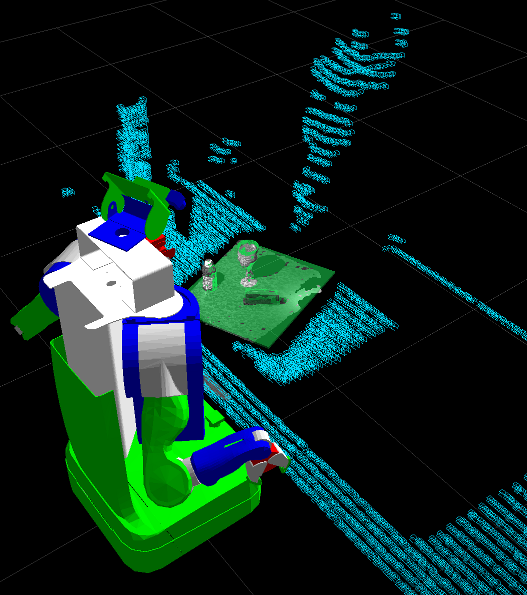

Image of the Collision Map. Note the points from the tilting laser (in cyan) and the explicitly recognized objects (in green).

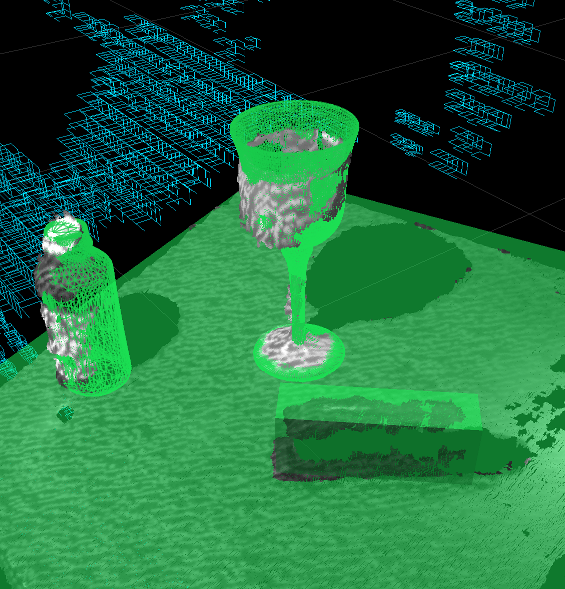

Detail of the Collision Map. Note that the recognized objects have been added as triangle meshes, while the unrecognized object has been added as a bounding box.
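The mesh-versus-box distinction, together with the collision names and GraspableObject repackaging, can be sketched as follows. This is a minimal illustration assuming each detection arrives as a pair of an optional database model id and its segmented point cluster; the classes `CollisionObjectSketch` and `GraspableObjectSketch`, the `object_<i>` naming scheme and the axis-aligned bounding box are hypothetical simplifications, and the package's actual messages and box computation may differ.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class CollisionObjectSketch:
    """Hypothetical stand-in for an entry in the collision environment."""
    name: str                       # collision name used to refer to it later
    shape: str                      # "mesh" (recognized) or "box" (unknown)
    model_id: Optional[int] = None  # database model id, if recognized
    box_min: Optional[Point] = None
    box_max: Optional[Point] = None


@dataclass
class GraspableObjectSketch:
    """Hypothetical stand-in for the GraspableObject passed to Pickup/Place."""
    collision_name: str
    model_id: Optional[int]
    cluster: List[Point]


def bounding_box(cluster: Sequence[Point]) -> Tuple[Point, Point]:
    """Axis-aligned bounding box of a segmented point cluster."""
    xs, ys, zs = zip(*cluster)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def add_detections(detections: Sequence[Tuple[Optional[int], Sequence[Point]]]):
    """Turn (model_id or None, cluster) pairs into collision + graspable objects."""
    collision, graspable = [], []
    for i, (model_id, cluster) in enumerate(detections):
        name = f"object_{i}"  # hypothetical naming scheme
        if model_id is not None:
            # Recognized: the database mesh would be inserted at the fitted pose.
            collision.append(CollisionObjectSketch(name, "mesh", model_id=model_id))
        else:
            # Unknown: only the cluster's extent is known, so use a box.
            lo, hi = bounding_box(cluster)
            collision.append(CollisionObjectSketch(name, "box", box_min=lo, box_max=hi))
        # Every object, known or unknown, is also packaged for grasping.
        graspable.append(GraspableObjectSketch(name, model_id, list(cluster)))
    return collision, graspable


collision, graspable = add_detections([
    (42, [(0.0, 0.0, 0.0), (0.1, 0.1, 0.2)]),                      # recognized
    (None, [(1.0, 0.0, 0.0), (1.2, 0.1, 0.3), (1.1, -0.1, 0.1)]),  # unknown
])
```

Because the collision name is stored in both records, a later planning request can refer to exactly the object the robot intends to grasp.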

ROS API

This package provides a single service, TabletopCollisionMapProcessing, which performs all of the above tasks.
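A minimal rospy client sketch for this service is shown below. The service name and the request and response field names (`detection_result`, `reset_collision_models`, `reset_attached_models`, `desired_frame`, `graspable_objects`, `collision_object_names`, `collision_support_surface_name`) are assumptions based on the PR2 pick-and-place tutorials of this era; check them against `TabletopCollisionMapProcessing.srv` in your checkout. The `detection_result` argument is assumed to be the result returned by the tabletop_object_detector, and the helper names `fill_request` and `process_detection` are hypothetical.

```python
# Assumed service name, as used by the PR2 pick-and-place tutorials;
# confirm with `rosservice list` on your system.
SERVICE_NAME = "/tabletop_collision_map_processing/tabletop_collision_map_processing"


def fill_request(req, detection_result, desired_frame="base_link"):
    """Populate a TabletopCollisionMapProcessing request (field names assumed)."""
    req.detection_result = detection_result  # output of tabletop_object_detector
    req.reset_collision_models = True        # clear previously added objects
    req.reset_attached_models = True         # clear objects attached to the robot
    req.desired_frame = desired_frame        # frame for returned GraspableObjects
    return req


def process_detection(detection_result, desired_frame="base_link", timeout=5.0):
    """Call the service and return what a Pickup request typically needs."""
    # ROS imports are deferred so fill_request() can be used without ROS.
    import rospy
    from tabletop_collision_map_processing.srv import (
        TabletopCollisionMapProcessing,
        TabletopCollisionMapProcessingRequest,
    )

    rospy.wait_for_service(SERVICE_NAME, timeout)
    proxy = rospy.ServiceProxy(SERVICE_NAME, TabletopCollisionMapProcessing)
    res = proxy(fill_request(TabletopCollisionMapProcessingRequest(),
                             detection_result, desired_frame))
    return (res.graspable_objects,
            res.collision_object_names,
            res.collision_support_surface_name)
```

Resetting the collision and attached models on each call keeps stale objects from a previous detection out of the environment; a caller that wants to accumulate objects across detections would set those flags to False instead.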

Running the Manipulation Pipeline

Tutorials and launch files for bringing up the complete manipulation pipeline (including the sensor processing provided here) and executing pickup and place tasks on the PR2 robot are provided in pr2_tabletop_manipulation_apps.